Big Omega

The big Oh notation considered in the previous lesson is the most widely used asymptotic notation in computer science. The reason is that when designing a software system, we want to be sure that the algorithms we choose will be fast enough to solve our problems at the required input sizes, so we need to know some upper bound on how fast the program's running time $T(n)$ will grow as input size $n$ increases — and if we manage to prove that $T \in O(f)$, where $f$ is some function which doesn't grow too fast, then this is the kind of upper bound we need, since $T \in O(f)$ means that our algorithm's running time $T$ is guaranteed to grow no more rapidly than $f$ (for large inputs and up to a constant).

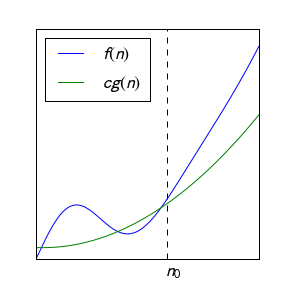

However, sometimes we are interested in the lower bound of an algorithm's running time, i. e. we would like to express the statement that the running time $T$ grows no less rapidly than some function $g$. An example is an encryption scheme: to feel safe when our documents are encrypted, we want to have some guarantee that a hacker, not knowing the password, will spend on average at least time of the order of magnitude $g(n)$ to break the encryption by brute-force attack, where $n$ is the length of the password and $g$ is some function (one can say that an encryption scheme is good if one can prove this for a function $g$ which grows rather fast). This calls for another asymptotic notation: the Big Omega.

An example of a statement involving $\Omega$ is about the complexity of sorting functions. It can be shown that any sorting algorithm (that makes black-box comparisons) needs to perform at least $\log n!$ comparisons for sorting a list of $n$ elements.1) Using big Omega notation, we can just write: any sorting algorithm has a worst case number of comparisons of $\Omega(\log n!)=\Omega(n\log n)$. (The last equality can be shown using the Stirling formula.) We cannot write $O(n\log n)$ here, because there are some sorting algorithms that take much more than $n\log n$ comparisons (e.g., bubble sort).

Analogously to Big Oh, for determining whether $f \in \Omega(g)$ it is often useful to consider the ratio of the functions. Namely, the following criterion holds.

The notions Big Oh and Big Omega are also tightly related:

All of the properties listed in Lemma (Some properties of big Oh) also hold for $\Omega$ instead of $O$ with small modifications. What are the modifications that need to be done in the lemma to make it hold for $\Omega$?

Alice tells Bob: I developed a new sorting algorithm with running time $\Omega(\sqrt n)$. Bob is not impressed. Why?