Big Oh

In this lesson we will introduce our first asymptotic notation: the Big Oh, which can be used for expressing an upper bound for an algorithm's running time.

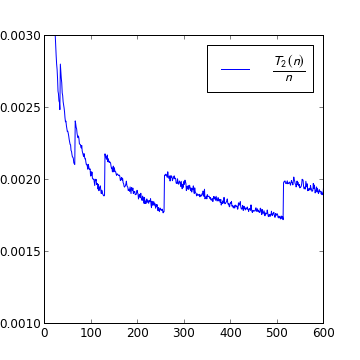

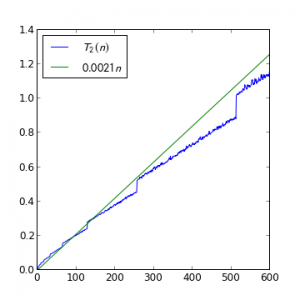

In the previous lesson The need for asymptotic notation: a case study we proved theoretically that for sufficiently large $n$, the running time of the second algorithm satisfies $T_2(n) < dn$ where $d$ is some constant. Here is the figure of measured running times again:

As said in the previous lesson, the figure confirms that we didn't make a mistake when calculating the theoretical upper bound: for example, from the figure one sees that $T_2(n) < 0.0021n$ if $n > 150$.

As said in the previous lesson, the figure confirms that we didn't make a mistake when calculating the theoretical upper bound: for example, from the figure one sees that $T_2(n) < 0.0021n$ if $n > 150$.

Why bother with theoretical analysis at all if one can measure running times in practice? Well, sometimes we want to have a rough estimate on the running time to decide whether to implement an algorithm at all; estimating worst-case running time (as opposed to average running time) based on measurements only is also not reliable (there could be some possible inputs taking a very long time but it might happen that we don't hit any of them in our benchmark). It can be quite hard to theoretically make claims with explicitly computed constants like “$T_2(n) < 0.0021n$ if $n > 150$”, but it is much easier to prove weaker statements like “for sufficiently large $n$, $T_2(n) < dn$ where $d$ is some constant”, which still give a rough idea. In computer science, such weaker statements are used so often that a shorter way for writing them has been invented: instead of “for sufficiently large $n$, $T_2(n) < dn$ where $d$ is some constant”, one writes \[T_2 \in O(n).\] This is what is called the Big Oh notation.

Before going to the general definition of Big Oh, let us note that in this and the following lessons, we will only talk about positive functions, i. e. functions whose values are positive real numbers. This is in order to simplify our definitions and properties of asymptotic notations a little. We will also assume that the function arguments are natural numbers. We will not demand the function to be defined and positive on all natural numbers, but only starting from some point (like $\log n$, which is defined and positive for $n \geq 2$). The function we are interested in will usually be the running time or memory consumption of an algorithm for given input size, which obviously satisfies these requirements (its value, i. e. running time or memory usage, is a positive real number, and the argument, i. e. the input size, is a natural number).

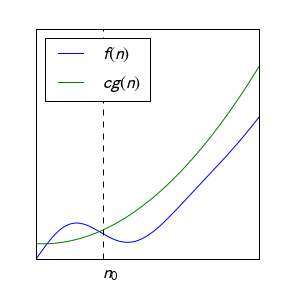

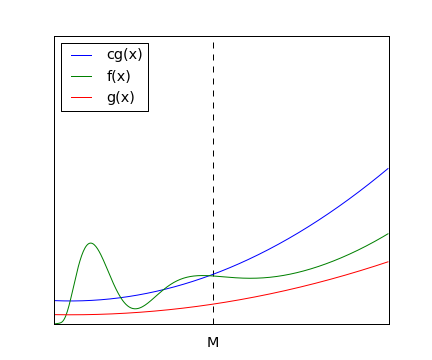

We will sometimes also use the phrase sufficiently large: when saying “$P(n)$ for all sufficiently large $n$”, we mean “there exists $n_0$ such that $P(n)$ for all $n \geq n_0$”. So the definition of Big Oh can be rephrased like this: $f \in O(g)$ if and only if there exists a constant $c \geq 0$ such that $f(n) \leq c g(n)$ for all sufficiently large $n$.

The Big Oh notation has two characteristic properties:

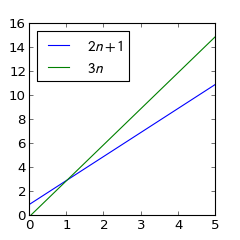

- it ignores the values of $f$ and $g$ for small $n$ and

- it also ignores constant factors: if, e. g. a function $f$ is linearly bounded, i. e. $f(n) \leq dn$ for a constant $d$, then we can simply write $f \in O(n)$ without factor $d$.

If $f(n)$ is the running time of an algorithm for input size $n$, then this is usually indeed the most important information one needs about the algorithm's performance:

- one really does not care how a running time behaves if it is sufficiently small;

- multiplying the algorithm's running time by a moderate constant will not dramatically change the maximal input size for which the algorithm is usable — in contrast, replacing it with a more efficient algorithm can give an improvement of several orders of magnitude1).

The Big Oh is the oldest in a family of mathematical notations, called asymptotic notations, which can be used to express how a function behaves when its argument grows to infinity. The Big Oh was taken into use by mathematician Paul Bachmann in the end of the 19th century, but is sometimes called the Landau symbol for another mathematician, Edmund Landau, who soon adopted it (the name Landau symbol is actually also used for a related asymptotic notation, the little oh; the latter was indeed introduced by Landau). For analyzing the performance of algorithms, the Big Oh has been popularized by the great computer scientist Donald Knuth, who also invented some other asymptotic notations (like the Big Omega, which we will meet later). Following Knuth, the Big Oh should actually be called the Big Omicron (a Greek letter that looks like the Latin O) — but most people still use Oh since this is easier to say and type.

1. Ways to estimate asymptotic complexity

Given two functions $f$ and $g$, how to determine whether $f \in O(g)$ or not? We have already done it, using straightforwardly the definition of Big Oh, for some pairs of functions (we proved that $T_2 \in O(n)$ and some more such statements in the exercises), but actually there is a general approach which turns out very useful: this is to look at how their ratio $\frac{f(n)}{g(n)}$ behaves. Indeed, $f \in O(g)$ means that there exists some $c$ such that $f(n) \leq cg(n)$ for sufficiently large $n$; dividing this inequality by $g(n)$ (and recalling that we assume $g(n) > 0$), we get an equivalent inequality $\frac{f(n)}{g(n)} \leq c$. So we get the following criterion:

For example, let's check whether $T_1(n) \in O(n^2)$ where $T_1(n)$ is the running time of our algorithm readNumbers1 in lesson The need for asymptotic notation: a case study. In that lesson, we got the formula

$T_1(n) = \frac{c}2n^2 + (d-\frac{c}2)n + e$

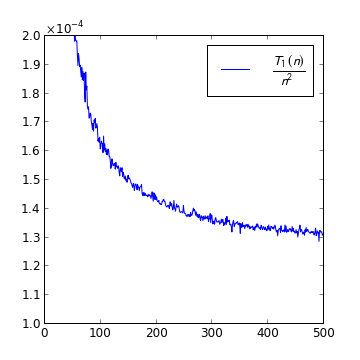

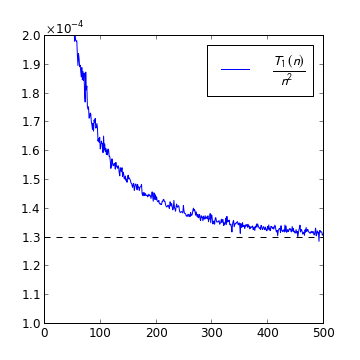

where $c$, $d$ and $e$ are some constants. The ratio is $\frac{T_1(n)}{n^2} = \frac{c}2 + (d-\frac{c}2)\frac1n + \frac{e}{n^2}$. Obviously we can make the last two terms as small as we like by choosing $n$ large enough. For example, there exist a number $n_0$ such that for all $n \geq n_0$, we have $(d-\frac{c}2)\frac1n < 0.1$ and $\frac{e}{n^2} < 0.1$. So $\frac{T_1(n)}{n^2} \leq \frac{c}2 + 0.2$ for sufficiently large $n$. Therefore, we have proven that $T_1 \in O(n^2)$. As the following diagram shows, measurements agree that ratio is indeed bounded: it appears that starting from $n \geq 50$ we have $\frac{T_1(n)}{n^2} < 2 \cdot 10^{-4}$.

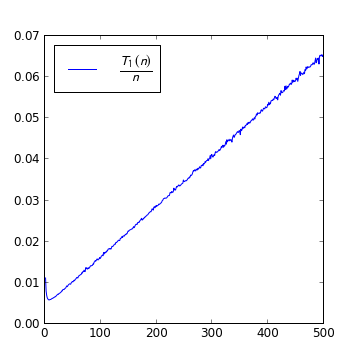

Now let's check whether $T_1(n) \in O(n)$. The ratio is $\frac{T_1(n)}{n} = \frac{c}2 n + (d-\frac{c}2) + \frac{e}{n}$. The first term grows to infinity as $n \to \infty$, the second term is a constant and the third one goes to $0$ as $n \to \infty$, therefore the whole sum grows to infinity as $n \to \infty$. So the ratio $\frac{T_1(n)}{n}$ is not bounded in the process $n \to \infty$ (for any $c$ and $n_0$ there exists $n \geq n_0$ such that $\frac{T_1(n)}{n} > c$) and therefore we have proven that $T_1(n) \not\in O(n^2)$. The following diagram shows that measurements agree with the analysis: the ratio appears not to be bounded by any number but grow linearly to infinity.

Actually, often we can make our life even easier using the concept of limit. (To recall the definition and properties of the limit of a function, see page Limit.) Namely, if the limit $\lim_{n \to \infty} \frac{f(n)}{g(n)}$ exists then the ratio $\frac{f(n)}{g(n)}$ is bounded for sufficiently large $n$ if and only if the limit is finite; so, in order to determine whether $f \in O(g)$, it suffices to calculate the limit of the ratio of the functions. For example, let's recheck the statements $T_1 \in O(n^2)$ and $T_1 \in O(n)$. Since $\lim \frac{T_1(n)}{n^2} = \lim \frac{c}2 + \lim (d-\frac{c}2)\frac1n + \lim \frac{e}{n^2} = \frac{c}2 + 0 + 0 = \frac{c}2 < \infty$ but $\lim \frac{T_1(n)}{n} = \lim \frac{c}2 n + \lim (d-\frac{c}2) + \lim \frac{e}{n} = \infty + (d-\frac{c}2) + 0 = \infty$, we can immediately conclude that the first ratio is bounded for sufficiently large $n$ but the second one is not; hence $T_1 \in O(n^2)$ but $T_1 \not\in O(n)$. Looking at the $\frac{T_1(n)}{n^2}$ ratio diagram once more, we notice that measurements agree with the analysis that the ratio converges: from the diagram it looks like $\lim \frac{T_1(n)}{n^2} \approx 1.3 \cdot 10^{-4}$.

So we found the following general criterion for determining whether $f \in O(g)$.

In some nasty cases, Criterion 2 cannot be applied since the limit does not exist. It turns out that an example is our function readNumbers2:

The diagram suggests that the ratio $\frac{T_2(n)}{n}$ does not converge to any particular number but keeps oscillating somewhere between 0.0021 and 0.0018; hence Criterion 2 cannot be used.3) Nevertheless, the ratio appears to be bounded for sufficiently large $n$ (the diagram suggests that $\frac{T_2(n)}{n} < 0.0021$ for $n > 150$) and this, by Criterion 1, means that $T_2 \in O(n)$. So this diagram agrees with our theoretical calculations in the first chapter that $T_2 \in O(n)$.

For most functions one encounters, however, the limit $\frac{f(n)}{g(n)}$ exists and Criterion 2 is useful.

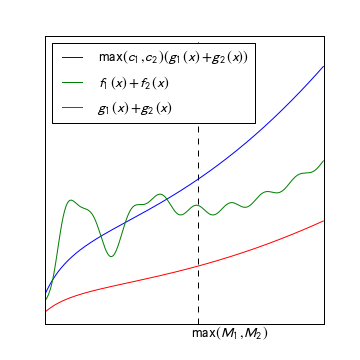

Lemma (Some properties of big Oh).

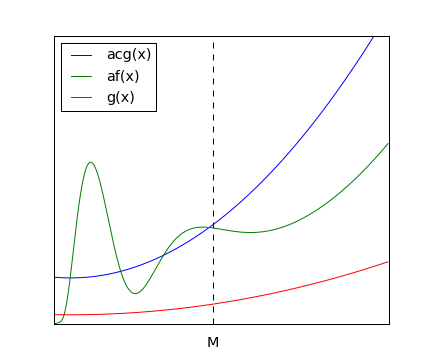

- If $f\in O(g)$ and $a > 0$ then $a\cdot f\in O(g)$. Proof

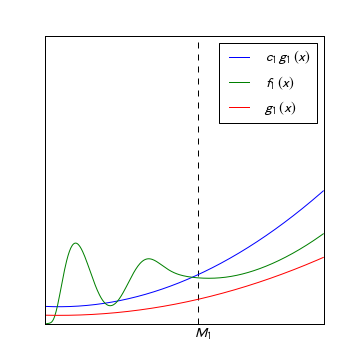

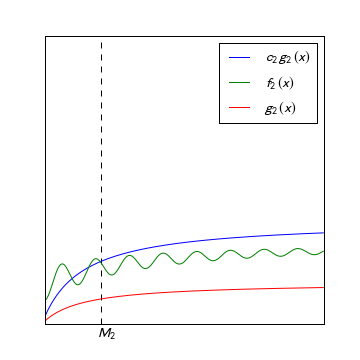

- If $f_1\in O(g_1)$ and $f_2\in O(g_2)$ then $f_1+f_2\in O(g_1+g_2)$. Proof

- If $f_1\in O(g_1)$ and $f_2\in O(g_2)$ then $f_1\cdot f_2\in O(g_1\cdot g_2)$.

- If $f_1,f_2\in O(g)$ then $f_1+f_2\in O(g)$.

- If $f_1 \in O(f_2)$ and $f_2\in O(g)$, then $f_1\in O(g)$.

- If $g_1 \in O(g_2)$, then $g_1+g_2 \in O(g_2)$.

- If $f\in O(g)$ and $\lim_{n \to \infty} b(n) = \infty$ then $\sum_{i=1}^{b(n)} f(i)\in O(\sum_{i=1}^{b(n)} g(i))$.

The following exercise demonstrates the power of asymptotic notation: using Big Oh estimates, one can get some idea about an algorithm's performance even if the exact expression for the running time is too difficult to calculate. (Of course, for some applications we might need the precise number of steps — in such cases, we cannot use asymptotic notation. But otherwise, asymptotics make life easier.)

Assume that you are analyzing the running time of an algorithm. Your analysis reveals:

- The subroutine $f(a)$ does at most $a^2$ multiplications of integers of length $a$.

- Besides that, $f(a)$ does some additional stuff. This additional stuff takes less time than $a$ multiplications, except if $a<a_0$ (due to some fixed overhead). $a_0$ is approximately $1000$, but you are not sure because computing the precise number would require much more work.

- The main program calls $f(a)$ for each $a=1,\dots,n^3$ and performs at most $n^5$ additional elementary operations.

- Multiplying integers of length $a$ takes time that is at most quadratic in $a$, up to some constant factor (i. e. at most $ka^2$ microseconds where $k$ is a constant).

Find a Big-Oh upper bound of the running time of the main program. More precisely, find a constant $d$ such that the program's running time is in $O(n^d)$; try to find the constant $d$ as small as possible. To justify your answer, use the properties stated in Lemma (Some properties of big Oh).

Hint. You may need the formula $\sum_{a=1}^{n^3} a^4=\frac{n^{15}}5+\frac{n^{12}}2+\frac{n^9}3-\frac{n^3}{30}$.

Lemma (Big Oh relations of some well-known functions).

- $p(n) \in O(n^k)$ if $p$ is a polynomial of degree $k$.

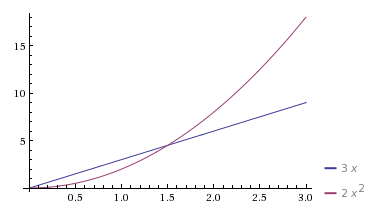

- $n^k \in O(a^n)$ for any constant real numbers $a > 1$ and $k > 0$.

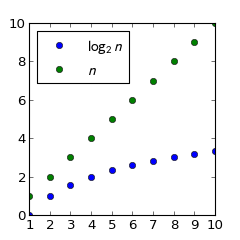

- $(\log_a n)^k \in O(n^l)$ for any constant real numbers $a > 1$, $k > 0$ and $l > 0$.

- $\log_a n \in O(\log_b n)$ for any constant real numbers $a > 1$ and $b > 1$.

- $a^n \in O(b^n)$ for any constant real numbers $b \geq a > 0$.

readNumbers1, which, with its quadratic running time, could within one second only handle inputs smaller than 3000 ($T_1(3000) \approx 1.3 \cdot 10^{-4} \cdot 3000^2 \text{ ms} \approx 1.2 \text{ s}$), and even if readNumbers1 ran 100 times faster than it actually does, it could handle in a second only inputs smaller than 30,000 (i. e., 10 times larger inputs than before, since the running time is quadratic and $10^2=100$). On the other hand, readNumbers2 with its linearly bounded running time is much faster, being able to handle $500,000$ elements in a second.